The next question to consider is how a survey should be administered.

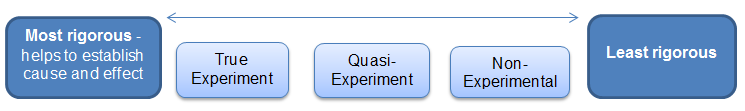

Information on common research and evaluation designs can be found below. The design selected should align with the purpose of evaluation, the type of information to be collected, and the program or organization’s capacity to implement a certain design. This section will explore the different designs that can best help you evaluate your program, starting with a short glossary of common terms.

Common Research Design Terms

Learn more about common research designs:

Common Research Design Types

The following design types are listed in order of rigor, from most to least rigorous.

- True Experimental

- Quasi-Experimental

- Nonexperimental

True Experimental Design

In a true experimental design, one group gets the intervention or program and another group does not. Participants are randomly assigned to each group, meaning everyone has an equal chance of receiving the intervention. This is important because random assignment makes the two groups comparable at the outset, which improves the likelihood that any changes observed or measured in the participants are due to the intervention or program.
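
As a minimal sketch of random assignment, the following Python example shuffles a list of participants and splits it into two groups. The function name `randomly_assign`, the group labels, and the participant IDs are hypothetical and used only for illustration.

```python
import random


def randomly_assign(participants, seed=None):
    """Split participants into two groups of (nearly) equal size at random."""
    rng = random.Random(seed)      # a seed makes the assignment reproducible
    shuffled = list(participants)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return {
        "intervention": shuffled[:midpoint],
        "control": shuffled[midpoint:],
    }


# Hypothetical participant IDs, for illustration only.
groups = randomly_assign(["P01", "P02", "P03", "P04", "P05", "P06"], seed=42)
```

Because the list is shuffled before it is split, each participant has the same chance of landing in the intervention group.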

Qualities of a True Experimental Design

- Random assignment of participants to groups

- Includes a control group

- Helps to establish cause and effect

- Considered the most rigorous type of research design

Quasi-Experimental Design

This design is often used when randomization of participants to an intervention or control group is not feasible or practical.

Qualities of a Quasi-Experimental Design

- Does not include randomization of participants

- May include a comparison group

- Individuals in the control or comparison group may have qualities and characteristics similar to those of individuals in the intervention group

Nonexperimental Design

This type of design is often used when random assignment is not possible and a control or comparison group is not available or practical. If a change in participants is measured or observed, this design does not necessarily tell us what caused the change in program participants, only that a change occurred.

Qualities of a Nonexperimental Design

- Does not include randomization of participants

- May not include a control or comparison group

- Feasible and practical to implement in a real program

- Cost-effective

To learn more about threats to validity in research designs, read the following page: Threats to evaluation design validity

Common Evaluation Designs

Most program evaluation plans fall somewhere on the spectrum between quasi-experimental and nonexperimental design. This is often the case because randomization may not be feasible in applied settings. Similarly, establishing a true experimental design can be costly and time-consuming. The design should best fit the evaluation needs of the study and the capacity of the organization.

The illustrations of specific evaluation designs that follow may help with decisions about how to structure data collection efforts. Most of these designs, depending on the specifics, would be either quasi-experimental or nonexperimental.

There are two designs commonly used in program evaluation: posttest design and pretest/posttest design. Both can be used with one group or integrate another group as a comparison.

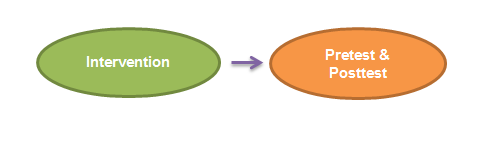

Posttest Only Design

A posttest only design is simple, straightforward, and can be done with one group (no comparison group) or two groups (with a comparison group) of participants. Participants receive an intervention and are tested afterwards.

One-Group Posttest Only Design in Practice

A company wants to know whether its employees benefited from a recent employee training conference (listed below as “intervention”). Following the implementation of the training conference, employees were given a short survey (posttest) to assess their satisfaction with the conference. The surveys were used to provide feedback and make changes to next year’s conference.

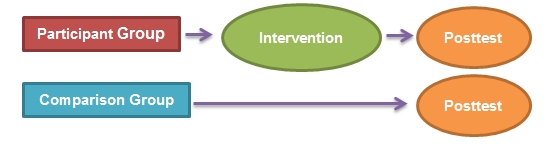

Two-Group Posttest Only Design in Practice

A posttest is administered to two groups to look at the relationship between abstinence education and positive youth development. One group (comparison group) does not participate in an after-school abstinence program. Another group (participant group) participates in the abstinence education program. Both groups receive a survey that measures positive youth development (posttest). The scores on the posttest are compared to see if the abstinence education program had an impact on positive youth development for those attending the program.

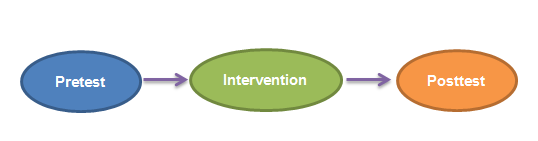
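
The comparison of posttest scores in a two-group design can be sketched in Python as a simple difference in group averages. The function name `posttest_difference`, the 1 to 5 scale, and the scores are assumptions made for illustration. They are not data from an actual program.

```python
from statistics import mean


def posttest_difference(participant_scores, comparison_scores):
    """Return the participant group's mean posttest score minus the comparison group's."""
    return mean(participant_scores) - mean(comparison_scores)


# Hypothetical posttest scores on a 1-5 scale, for illustration only.
participant_group = [4, 5, 3, 4]
comparison_group = [3, 3, 4, 2]
difference = posttest_difference(participant_group, comparison_group)
```

A positive difference means the participant group scored higher on average. Without random assignment or a pretest, that gap may also reflect differences that existed between the groups before the program began.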

Pretest/Posttest Design

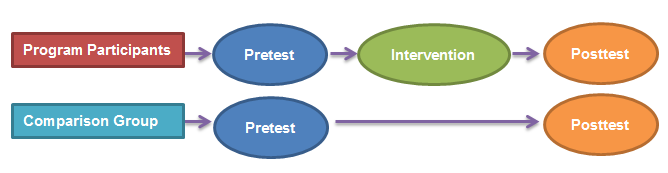

This type of design can also be done with or without a comparison group. Participants receive a pretest, receive the intervention, and are tested afterward.

One-Group Pretest/Posttest Design in Practice

The pretest/posttest design may be used to evaluate the impact of a training program on workplace safety among office employees.

Example: Employees are given a survey (pretest) to assess their knowledge of workplace safety. Then, they are asked to take a two-hour training addressing workplace safety protocols (intervention). Following the training, they receive another survey assessing their knowledge of workplace safety (posttest).
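
One way to summarize results from a one-group pretest/posttest design is the average change in each participant's score. The sketch below assumes each employee's pretest and posttest scores can be matched by position in two lists. The function name `mean_change` and the scores are hypothetical.

```python
from statistics import mean


def mean_change(pretest, posttest):
    """Return the average per-participant change from pretest to posttest."""
    if len(pretest) != len(posttest):
        raise ValueError("Each participant needs both a pretest and a posttest score.")
    # Pair each participant's two scores and take posttest minus pretest.
    changes = [post - pre for pre, post in zip(pretest, posttest)]
    return mean(changes)


# Hypothetical knowledge scores for four employees, for illustration only.
pretest_scores = [5, 6, 4, 7]
posttest_scores = [8, 7, 6, 9]
average_gain = mean_change(pretest_scores, posttest_scores)
```

With no comparison group, a positive average gain shows that scores rose after the training, but it does not by itself show that the training caused the increase.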

Two-Group Pretest/Posttest Design in Practice

An afterschool program is interested in whether participation in its civic engagement curriculum (independent variable) leads to improved efficacy in youth participants. Program staff select two youth groups and assign one group to the civic engagement curriculum (participant group) while the other group receives no program (comparison group). A pretest is given to both groups. The program participants receive the curriculum for three months while the comparison group does not. Both groups are then given a posttest, and the results are compared.

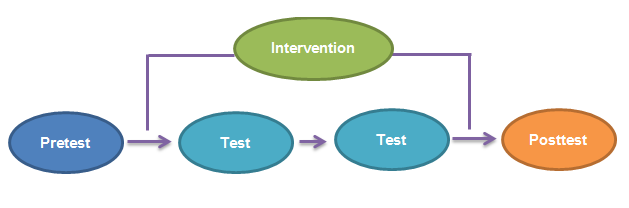
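
One possible way to compare results from a two-group pretest/posttest design is to subtract the comparison group's average change from the participant group's average change. This is a difference-in-differences style calculation, chosen here for illustration. It is not a method named in this guide. The function name and the scores below are hypothetical.

```python
from statistics import mean


def difference_in_change(participant_pre, participant_post,
                         comparison_pre, comparison_post):
    """Return the participant group's average change minus the comparison group's."""
    participant_change = mean(participant_post) - mean(participant_pre)
    comparison_change = mean(comparison_post) - mean(comparison_pre)
    return participant_change - comparison_change


# Hypothetical efficacy scores, for illustration only.
result = difference_in_change(
    participant_pre=[2, 3, 4],
    participant_post=[4, 5, 6],
    comparison_pre=[3, 3, 3],
    comparison_post=[3, 4, 5],
)
```

Subtracting the comparison group's change helps account for change that would have happened without the program, such as youth maturing over the three months.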

Time Series Design

A variation of the pretest/posttest design, this design collects data at regular intervals and aims to assess an intervention or program before, during, and after the course of the intervention or program. A time series design may integrate a control or comparison group. If so, it may more closely resemble a quasi-experimental or experimental design.

Time Series Design in Practice

A program wishes to examine motivation levels in its empowerment course to see whether participants who attend the course (intervention) increase their levels of motivation. Program staff develop a survey to assess motivation levels. The survey is administered once before the program (pretest), three months after program start (test), six months after program start (test), and finally at the end of one year following the conclusion of the program (posttest). The test scores at different time points are analyzed to look at the overall effect of the program.
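
As a rough sketch of how scores from several time points might be summarized, the Python example below averages the scores at each time point and reports the change from the first one. The function names, time point labels, and scores are assumptions for illustration. A real analysis would depend on the survey and the sample.

```python
from statistics import mean


def mean_by_time_point(scores_by_time_point):
    """Return (label, mean score) pairs in the order the time points were given."""
    return [(label, mean(scores)) for label, scores in scores_by_time_point.items()]


def change_from_baseline(means):
    """Return (label, change) pairs, measuring each mean against the first time point."""
    baseline = means[0][1]
    return [(label, value - baseline) for label, value in means]


# Hypothetical motivation scores at four time points, for illustration only.
survey_waves = {
    "pretest": [2, 3, 4],
    "3 months": [3, 4, 5],
    "6 months": [4, 4, 4],
    "posttest": [4, 5, 6],
}
means = mean_by_time_point(survey_waves)
changes = change_from_baseline(means)
```

Looking at the change at each time point, rather than only the first and last, shows whether motivation rose steadily, leveled off, or dropped after an early gain.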

Retrospective Design

A retrospective design could be considered another variation of the pretest/posttest design. In a retrospective design, the participant takes both a pretest and a posttest after the intervention is complete. Often this type of design assesses changes in skills, attitudes, or behaviors. Participants may be asked how they felt about their skills before they started the intervention/program and how they feel about their skills now. This design can be cost-effective and reduce survey burden on participants.

Retrospective Design in Practice

A program coordinator wants to determine how participating in a one-day health clinic and prevention workshop for homeless youth (intervention) has impacted youth knowledge about specific health risks. To do so, program staff administer a retrospective pretest survey to assess whether participants’ knowledge about health risks changed after participating in the one-day event. Participants are asked to complete questions about their previous and current knowledge of health risks in one form at the conclusion of the day’s activities.
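
Because a retrospective survey collects the "before" and "now" ratings on one form, each response can be stored as a single record holding both values. The sketch below computes the share of participants who rated their current knowledge higher than their earlier knowledge. The field names `before` and `now`, the function name, and the ratings are hypothetical.

```python
def share_reporting_gain(responses):
    """Return the fraction of responses where the 'now' rating exceeds the 'before' rating."""
    if not responses:
        raise ValueError("At least one response is required.")
    gains = sum(1 for response in responses if response["now"] > response["before"])
    return gains / len(responses)


# Hypothetical self-ratings of health-risk knowledge, for illustration only.
forms = [
    {"before": 2, "now": 4},
    {"before": 3, "now": 3},
    {"before": 1, "now": 3},
    {"before": 4, "now": 5},
]
share = share_reporting_gain(forms)
```

Both ratings are self-reported after the event, so the result describes perceived change in knowledge rather than change measured at two separate points in time.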