When developing surveys and other evaluation instruments, it is important to understand the different types of questions and which to use to obtain the most accurate results from each study. Consider the following when determining how to design evaluation instruments.

Survey Development Tips

What Kind of Information Is Needed?

| Attributes | Beliefs, Attitudes, Opinions | Knowledge | Behavior |
|---|---|---|---|
| Who people are (personal or demographic characteristics) | Perceptions people hold or psychological states | What people know; how well they understand something | What people do, have done in the past, or plan to do in the future |

What Types of Questions Make the Most Sense?

| Open-Ended Questions | Closed-Ended Questions |
|---|---|
| Respondents provide their own answers | Respondents select responses from a list of options |

How Should the Questions Be Worded?

- Use simple wording
- Avoid jargon and abbreviations
- Be specific
- Be clear
- Avoid assumptions
- Consider language, reading level, and age
- Avoid double-barreled questions
- Use complete sentences
- Select clear and logical response categories
- Avoid bias in questions
- Make instructions clear
- Avoid long questions

Not all surveys are created equal. Whether you use a preexisting survey, develop your own, or combine the two, it is important to consider the qualities of a good survey and to determine whether your survey is appropriate and effective. The sections that follow explain the strengths and weaknesses to look for so you can make informed decisions about which survey to use.

Psychometrics

Psychometrics is a field of study that addresses psychological measurement, which includes survey or questionnaire development. Two key concepts in psychometrics are validity (i.e., whether a survey measures what it intends to measure) and reliability (i.e., whether a survey produces consistent results when it repeatedly measures the same construct). Understanding these concepts can help researchers and evaluators select or develop appropriate instruments and identify whether their questionnaire is performing as intended. Each concept is described in detail below.

Quick Facts About Psychometrics

- Includes techniques for correctly measuring constructs such as knowledge or opinions

- Involves construction of surveys, assessments, or tests

Ways of Ensuring Survey Credibility

Reliability

Reliability refers to a survey’s ability to produce consistent results when repeatedly measuring the same outcome.

Understanding reliability can help verify that a survey will generate the same or similar results across time and social contexts. The reliability of a survey can be assessed through several procedures; the most common checks are listed below.

Checks for Reliability

| Check | Description |
|---|---|
| Test-Retest | Involves testing and retesting the same set of people on the same set of test items to examine the consistency of their responses |
| Interrater | Looks at the extent to which trained raters agree when using the same survey |
| Internal Consistency | Involves the cohesiveness of a scale’s items. Do all the items in the scale measure the same characteristic or concept? Can they be logically grouped together? |
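Each of these checks is usually summarized with a statistic. The sketch below is one possible way to compute them, not a procedure prescribed here: it assumes Pearson's correlation for test-retest, Cohen's kappa for interrater agreement, and Cronbach's alpha for internal consistency. These are common choices, but they are assumptions on our part, and the function names and sample data are hypothetical.

```python
from statistics import mean, pvariance


def pearson_r(x, y):
    """Test-retest: correlation between the same people's scores at two times."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def cohen_kappa(rater_a, rater_b):
    """Interrater: agreement between two raters, corrected for chance agreement."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    labels = set(rater_a) | set(rater_b)
    expected = sum(
        (rater_a.count(label) / n) * (rater_b.count(label) / n) for label in labels
    )
    return (observed - expected) / (1 - expected)


def cronbach_alpha(rows):
    """Internal consistency: rows holds one list of item scores per respondent."""
    k = len(rows[0])  # number of items in the scale
    item_variances = [pvariance([row[i] for row in rows]) for i in range(k)]
    total_variance = pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical data, for illustration only.
time_1 = [10, 12, 15, 18, 20]          # scores at first administration
time_2 = [11, 12, 14, 19, 21]          # same people, second administration
ratings_a = ["yes", "no", "yes", "no"]  # rater A's codes
ratings_b = ["yes", "no", "yes", "no"]  # rater B's codes
item_scores = [[1, 1, 1], [2, 2, 2], [3, 3, 3]]  # 3 respondents x 3 items

print("test-retest r:", round(pearson_r(time_1, time_2), 2))
print("Cohen's kappa:", cohen_kappa(ratings_a, ratings_b))
print("Cronbach's alpha:", round(cronbach_alpha(item_scores), 2))
```

Values closer to 1 indicate more consistent measurement on all three statistics; what counts as "high enough" depends on the field and the stakes of the decision.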

Quick Facts About Reliability

- Checks if a measure produces the same results, time and again

- Uses repeated tests with the same survey to determine the consistency of the survey

Validity

Validity is the extent to which a survey has correctly captured the concept of interest.

In applied research and evaluation, using a valid survey is particularly important because the results can be used to inform programming decisions. Listed below are two of the major types of validity to look for in surveys: internal and external validity. It is important to be familiar with both types to choose the best survey possible and to understand the limitations of an evaluation or research study.

Measures of Validity

| Type | Description | Example |
|---|---|---|
| Internal Validity | The degree to which a survey correctly measures what it intended to measure | You run an after-school program and want to use a survey to measure students’ perceptions about school. To ensure internal validity, make sure the survey questions specifically measure students’ feelings about school, not how they feel about the after-school program or just how they feel that day. Be sure the questions asked measure what they are intended to measure and that the survey as a whole measures what it is intended to measure. |
| External Validity | The ability of the survey to create generalizable results | Asking youth in a large after-school program how many other youth in their program they talk to each day might be an accurate measure of social networks in that program, but the findings may not be an accurate measure of social networks in a very small program. |

Quick Facts About Validity

- Addresses whether a survey actually reflects the concept it is trying to measure

- May look at the approximation of results to the actual situation

- Highlights the importance of gathering the right information with your survey

An Ideal Survey Is Both Reliable and Valid

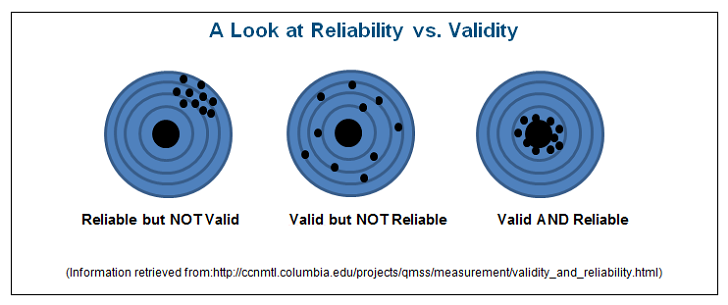

Imagine that you have collected survey data on civic engagement in an after-school program. In the illustration, each dot represents an observed or measured score, and the bull's-eye represents the true score for that population. In the first figure, we see that it is possible for a survey to be reliable but have low validity: participants’ scores are consistent, but they consistently miss the mark. It is also possible for a survey to be valid but have low reliability: participants’ scores are inconsistent, yet the average of all the scores lands on the mark. In the last figure, participants’ scores are both consistent and on the mark.
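The three target patterns can also be described with two numbers: how far the average score sits from the true score (a rough stand-in for validity) and how scattered the scores are (a rough stand-in for reliability). The sketch below uses made-up scores and a made-up true score of 50 purely for illustration; the names `describe` and `TRUE_SCORE` are hypothetical.

```python
from statistics import mean, stdev

TRUE_SCORE = 50  # hypothetical "bull's-eye" for the population


def describe(scores, true_score=TRUE_SCORE):
    """Return (bias, spread): average distance from the target and scatter of scores."""
    return mean(scores) - true_score, stdev(scores)


# Made-up scores for each of the three patterns.
reliable_not_valid = [60, 61, 59, 60, 60]   # tight cluster, off target
valid_not_reliable = [30, 70, 45, 55, 50]   # scattered, centered on target
reliable_and_valid = [50, 51, 49, 50, 50]   # tight cluster, on target

for name, scores in [
    ("reliable, not valid", reliable_not_valid),
    ("valid, not reliable", valid_not_reliable),
    ("reliable and valid", reliable_and_valid),
]:
    bias, spread = describe(scores)
    print(f"{name}: bias={bias:+.1f}, spread={spread:.1f}")
```

A small spread with a large bias corresponds to the reliable-but-not-valid pattern; a bias near zero with a large spread corresponds to the valid-but-unreliable pattern.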

Threats to Design Validity

To ensure evaluations and research designs are valid, it is important to understand the threats to the internal or external validity of your evaluation design.

Threats to the internal validity of your study design are factors outside of the program or treatment that could account for results obtained from the evaluation. Ensuring internal validity means you can be more certain that your intervention or program caused the effects observed.

Threats to the external validity of your study design are factors that limit how well your conclusions generalize to other people, settings, or times. An inaccurate generalization is one example.

Common Threats to Internal Validity

Selection Bias

When control and program participants are selected from populations with different characteristics

Attrition or Mortality

When different proportions of participants or different kinds of participants drop out from the control or program groups

History

When external or unanticipated events occur between survey administrations (for example, between the pretest and the posttest)

Maturation

When aging or development of participants occurs

Instrumentation

When aspects of the evaluation survey itself change between pre- and posttest
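Two of the threats above, selection bias and attrition, can be screened with simple arithmetic before interpreting results. The sketch below is one way to do that under our own assumptions: it compares dropout rates between groups and computes a standardized mean difference on a baseline characteristic. The function names, the choice of a standardized mean difference, and all numbers are hypothetical.

```python
from statistics import mean, variance


def attrition_rate(enrolled, completed):
    """Share of enrolled participants who dropped out before the posttest."""
    return (enrolled - completed) / enrolled


def standardized_mean_difference(group_a, group_b):
    """Baseline difference between groups, in pooled standard deviation units."""
    pooled_sd = ((variance(group_a) + variance(group_b)) / 2) ** 0.5
    return (mean(group_a) - mean(group_b)) / pooled_sd


# Attrition: do the program and control groups lose participants at different rates?
program_dropout = attrition_rate(enrolled=50, completed=40)
control_dropout = attrition_rate(enrolled=50, completed=25)
print("differential attrition:", abs(program_dropout - control_dropout))

# Selection: do the groups already differ at baseline (here, made-up pretest scores)?
program_pretest = [12, 14, 15, 13, 16]
control_pretest = [10, 11, 13, 12, 9]
print("baseline difference:", standardized_mean_difference(program_pretest, control_pretest))
```

Large gaps on either number suggest that differences at posttest may reflect who was in each group rather than the program itself.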

Common Threats to External Validity

Situational or Contextual Factors

When specific conditions under which the research was conducted limit its generalizability

Pretest or Posttest Effects

When results appear only because participants took a pretest or posttest, so they may not hold for people who are not tested

Hawthorne Effects

When participants’ reactions to being studied alter their behavior and therefore the study results

Experimenter Effects

When results are influenced by the researcher’s actions